Kubernetes was designed in a highly modular manner to orchestrate multiple containers for a specific purpose. In this post, I'll focus on ReplicationControllers, ReplicaSets, and Deployments.

Why Kubernetes needs replication

Before we explain how to create and manage replicas, let's look at why we need multiple replicated containers. Replication serves these core purposes:

- Reliability and Availability: When you run multiple replicated pods, you minimize the effect of one or more pods failing on a host.

- Load Balancing: Running multiple pods on different nodes lets you increase the capacity and reliability of applications. Spreading traffic across them prevents overloading a single node and lets you perform maintenance tasks without downtime.

- Scaling and Maintaining: Scaling becomes an important part of the application. Kubernetes lets you easily scale up or down so that the application can handle more requests per minute.

Kubernetes Replication

Kubernetes offers multiple ways to implement replication for container-based applications. In this post, we will explain ReplicationControllers, ReplicaSets, and Deployments.

ReplicationController

A ReplicationController ensures that a specified number of pod replicas are running at any one time. In other words, a ReplicationController makes sure that a pod or a homogeneous set of pods is always up and available. You can find more detail about how a ReplicationController works in the Kubernetes documentation.

As with all other Kubernetes definition files, a ReplicationController needs apiVersion, kind, metadata, and spec fields. A ReplicationController definition contains two spec fields. The first, at the top level, holds the ReplicationController settings such as selector, template, and replicas. The second, nested under template, defines the pod and its containers: image, name, ports, nodeSelector, storage, environment, and so on.

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: nginx
    # modify replicas according to your case
    spec:
      replicas: 3
      selector:
        app: nginx
      template:
        metadata:
          name: nginx
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80
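The pod-level spec can carry more than an image and a port. As a rough sketch of the container fields listed above, the following definition adds a nodeSelector, an environment variable, and an emptyDir volume. The name nginx-extended, the node label disktype: ssd, the variable APP_MODE, and the volume name cache are placeholders made up for illustration.

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-extended          # hypothetical name
spec:                           # first spec: ReplicationController settings
  replicas: 3
  selector:
    app: nginx-extended
  template:
    metadata:
      labels:
        app: nginx-extended     # must match the selector above
    spec:                       # second spec: pod and container settings
      nodeSelector:
        disktype: ssd           # assumed node label; pods stay Pending if no node carries it
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
        env:
        - name: APP_MODE        # hypothetical variable
          value: "demo"
        volumeMounts:
        - name: cache
          mountPath: /cache
      volumes:
      - name: cache
        emptyDir: {}            # scratch storage that lives as long as the pod
```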

In this definition file, the selector has one requirement:

- the app label must be nginx

The following example shows how to use kubectl to generate a ReplicationController template file, which you can then modify as needed.

    #kubectl run --generator=run/v1 --image=nginx web-server --port=80 --dry-run -o yaml > /tmp/web.yaml
    kubectl run --generator=run/v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

    apiVersion: v1
    kind: ReplicationController
    metadata:
      creationTimestamp: null
      labels:
        run: web-server
      name: web-server
    spec:
      replicas: 1
      selector:
        run: web-server
      template:
        metadata:
          creationTimestamp: null
          labels:
            run: web-server
        spec:
          containers:
          - image: nginx
            name: web-server
            ports:
            - containerPort: 80
            resources: {}
    status:
      replicas: 0

    #kubectl create -f /tmp/web.yaml
    replicationcontroller/web-server created
    #kubectl get replicationcontroller
    NAME         DESIRED   CURRENT   READY   AGE
    web-server   1         1         1       8s
    #kubectl get pods
    NAME               READY   STATUS    RESTARTS   AGE
    web-server-9rllk   1/1     Running   0          12s

To get the status of a running ReplicationController, use kubectl's "describe" option. As you can see, only one pod, named "web-server-9rllk", is running on the system.

    #kubectl describe replicationcontroller web
    Name:         web-server
    Namespace:    default
    Selector:     run=web-server
    Labels:       run=web-server
    Annotations:  <none>
    Replicas:     1 current / 1 desired
    Pods Status:  1 Running / 0 Waiting / 0 Succeeded / 0 Failed
    Pod Template:
      Labels:  run=web-server
      Containers:
       web-server:
        Image:        nginx
        Port:         80/TCP
        Host Port:    0/TCP
        Environment:  <none>
        Mounts:       <none>
      Volumes:        <none>
    Events:
      Type    Reason            Age    From                    Message
      ----    ------            ----   ----                    -------
      Normal  SuccessfulCreate  2m17s  replication-controller  Created pod: web-server-9rllk

To change the replica count, use kubectl's "edit" option.

    #kubectl edit replicationcontroller web-server
    replicationcontroller/web-server edited
    #kubectl get pods
    NAME               READY   STATUS              RESTARTS   AGE
    web-server-95pfd   0/1     ContainerCreating   0          2s
    web-server-9rllk   1/1     Running             0          6m19s
    #kubectl get pods --selector=run=web-server --output=jsonpath={.items..metadata.name}
    web-server-95pfd web-server-9rllk
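Inside the editor, the change comes down to a single field. Assuming the goal is two replicas, as the two pods in the output above suggest, the edited part of the object would look roughly like this fragment:

```yaml
spec:
  replicas: 2          # was 1; the controller starts one more pod to match
  selector:
    run: web-server    # unchanged
```

Everything else in the object stays as it was; the ReplicationController notices the gap between desired and current replicas and creates the missing pod.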

ReplicaSets

ReplicaSets and ReplicationControllers serve the same core purposes: reliability, availability, load balancing, scaling, and maintenance. A ReplicaSet is the next-generation ReplicationController and supports the newer set-based label selectors. In short, ReplicaSets offer more options in the selector field than ReplicationControllers do.

Kubernetes recommends using Deployments instead of directly using ReplicaSets or ReplicationControllers.

    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: nginx
    spec:
      replicas: 3
      selector:
        matchExpressions:
        - {key: app, operator: In, values: [nginx]}
        - {key: environment, operator: NotIn, values: [production]}
      template:
        metadata:
          name: nginx
          labels:
            app: nginx
            # the template labels must satisfy the selector, so this cannot be "production"
            environment: test
        spec:
          containers:
          - name: nginx
            image: nginx
            ports:
            - containerPort: 80

In this definition file, the selector has two requirements:

- the app label must be nginx

- the environment label must not be production
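Set-based selectors support more than In and NotIn. As an illustrative sketch, the ReplicaSet below combines matchLabels with the Exists and DoesNotExist operators; all requirements must hold at once. The name nginx-set-based and the tier and canary label keys are assumptions made up for this example.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: nginx-set-based        # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx               # equality-based requirement
    matchExpressions:
    - {key: tier, operator: Exists}          # label must be present, any value
    - {key: canary, operator: DoesNotExist}  # label must be absent
  template:
    metadata:
      labels:
        app: nginx
        tier: frontend         # satisfies "tier Exists"; no canary label is set
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
```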

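The following example shows how to use kubectl to generate a template file for a ReplicaSet. Use the same command as before to create the file, then change it as needed. The generator produces a ReplicationController, so the apiVersion, kind, and selector have to be edited by hand before the file looks like the one shown here.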

    #kubectl run --generator=run/v1 --image=nginx web-server-replicaset --port=80 --dry-run -o yaml > /tmp/web_server_rs.yaml
    #cat /tmp/web_server_rs.yaml
    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      creationTimestamp: null
      labels:
        app: web-server-replicaset
      name: web-server-replicaset
    spec:
      replicas: 1
      selector:
        matchExpressions:
        - {key: app, operator: In, values: [web-server-replicaset]}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: web-server-replicaset
            environment: production
        spec:
          containers:
          - image: nginx
            name: web-server-replicaset
            ports:
            - containerPort: 80
            resources: {}
    status:
      replicas: 0
    #kubectl create -f /tmp/web_server_rs.yaml
    replicaset.apps/web-server-replicaset created
    #kubectl get rs
    NAME                    DESIRED   CURRENT   READY   AGE
    web-server-replicaset   1         1         1       13s
    #kubectl get pods
    NAME                          READY   STATUS    RESTARTS   AGE
    web-server-replicaset-ggwjr   1/1     Running   0          20s
    #kubectl describe rs/web-server-replicaset
    Name:         web-server-replicaset
    Namespace:    default
    Selector:     app in (web-server-replicaset)
    Labels:       app=web-server-replicaset
    Annotations:  <none>
    Replicas:     1 current / 1 desired
    Pods Status:  1 Running / 0 Waiting / 0 Succeeded / 0 Failed
    Pod Template:
      Labels:  app=web-server-replicaset
               environment=production
      Containers:
       web-server-replicaset:
        Image:        nginx
        Port:         80/TCP
        Host Port:    0/TCP
        Environment:  <none>
        Mounts:       <none>
      Volumes:        <none>
    Events:
      Type    Reason            Age   From                   Message
      ----    ------            ----  ----                   -------
      Normal  SuccessfulCreate  84s   replicaset-controller  Created pod: web-server-replicaset-ggwjr
    #kubectl edit rs/web-server-replicaset
    replicaset.apps/web-server-replicaset edited
    #kubectl get pods
    NAME                          READY   STATUS              RESTARTS   AGE
    web-server-replicaset-ggwjr   1/1     Running             0          2m30s
    web-server-replicaset-vjwh6   0/1     ContainerCreating   0          1s
    #kubectl delete rs/web-server-replicaset
    replicaset.apps "web-server-replicaset" deleted

Deployments

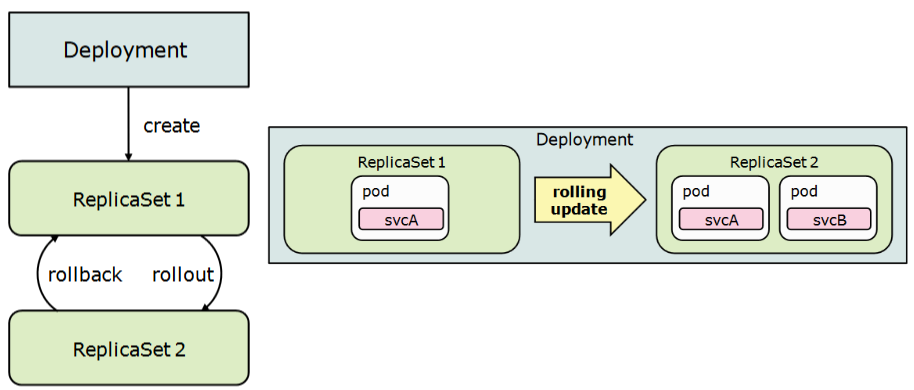

This is the easiest and most commonly used way to create resources for deploying applications. Deployments provide the same replication function as ReplicaSets, plus additional abilities such as rollout and rollback. For example, if you create a Deployment with one replica, it compares the desired state with the current state of its ReplicaSet. If the current state is "0", it creates the ReplicaSet and the Pod. A Deployment creates a ReplicaSet named <Deployment_Name>-<ReplicaSetID>, and the ReplicaSet in turn creates pods named <Deployment_Name>-<ReplicaSetID>-<PodID>.

We will use the following definition file to create a basic Deployment. Copy the definition below and save it as nginx_deployment.yaml. You can use Katacoda to practice.

    #cat > nginx_deployment.yaml <<EOF
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.7.9
            ports:
            - containerPort: 80
    EOF

    #kubectl create -f nginx_deployment.yaml
    deployment.apps/nginx-deployment created
    #kubectl get deployment
    NAME               READY   UP-TO-DATE   AVAILABLE   AGE
    nginx-deployment   0/3     3            0           6s
    #kubectl get replicaset
    NAME                          DESIRED   CURRENT   READY   AGE
    nginx-deployment-54f57cf6bf   3         3         3       13s
    #kubectl get pods
    NAME                                READY   STATUS    RESTARTS   AGE
    nginx-deployment-54f57cf6bf-6k7f7   1/1     Running   0          20s
    nginx-deployment-54f57cf6bf-h6fbf   1/1     Running   0          20s
    nginx-deployment-54f57cf6bf-jhnl5   1/1     Running   0          20s

Let's go ahead and dig into the new attributes of a Deployment.

- StrategyType: Specifies the strategy used to replace old Pods with new ones. It can be set to RollingUpdate or Recreate. Check the Kubernetes documentation for more details.

- RollingUpdateStrategy: You can specify maxUnavailable and maxSurge to control the rolling update process.

- MinReadySeconds: The minimum number of seconds for which a newly created Pod should be ready without any of its containers crashing, for it to be considered available. The default value is "0".

    #kubectl describe deployment nginx-deployment
    Name:                   nginx-deployment
    Namespace:              default
    CreationTimestamp:      Sun, 12 Jan 2020 19:17:11 +0000
    Labels:                 app=nginx
    Annotations:            deployment.kubernetes.io/revision: 1
    Selector:               app=nginx
    Replicas:               3 desired | 3 updated | 3 total | 3 available | 0 unavailable
    StrategyType:           RollingUpdate
    MinReadySeconds:        0
    RollingUpdateStrategy:  25% max unavailable, 25% max surge
    Pod Template:
      Labels:  app=nginx
      Containers:
       nginx:
        Image:        nginx:1.7.9
        Port:         80/TCP
        Host Port:    0/TCP
        Environment:  <none>
        Mounts:       <none>
      Volumes:        <none>
    Conditions:
      Type           Status  Reason
      ----           ------  ------
      Available      True    MinimumReplicasAvailable
      Progressing    True    NewReplicaSetAvailable
    OldReplicaSets:  <none>
    NewReplicaSet:   nginx-deployment-54f57cf6bf (3/3 replicas created)
    Events:
      Type    Reason             Age    From                   Message
      ----    ------             ----   ----                   -------
      Normal  ScalingReplicaSet  2m10s  deployment-controller  Scaled up replica set nginx-deployment-54f57cf6bf to 3
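The defaults in the describe output (RollingUpdate, 25% max unavailable, 25% max surge, MinReadySeconds 0) can be overridden in the definition file. The sketch below sets the three attributes explicitly on the same nginx-deployment; the values 1, 1, and 10 are arbitrary choices for illustration, not recommendations.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  minReadySeconds: 10        # illustrative: a pod must stay ready 10s to count as available
  strategy:
    type: RollingUpdate      # the alternative is Recreate, which takes no rollingUpdate block
    rollingUpdate:
      maxUnavailable: 1      # illustrative: at most 1 pod below the desired count during an update
      maxSurge: 1            # illustrative: at most 1 pod above the desired count during an update
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
```

Both maxUnavailable and maxSurge accept either an absolute number or a percentage of the desired replica count.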

Time to test your skills. Use Katacoda and find out the answers.

Question 1: Create a namespace called casesup.

    #kubectl create namespace casesup
    namespace/casesup created
    #kubectl describe namespace casesup
    Name:         casesup
    Labels:       <none>
    Annotations:  <none>
    Status:       Active

    No resource quota.

    No LimitRange resource.
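The same namespace can also be described declaratively. This minimal definition is a sketch of the equivalent object; saved to a file, it could be created with kubectl create -f like the other definition files in this post.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: casesup
```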

Question 2: Use the casesup namespace and create a ReplicationController. You can use the busybox image to create the pod. Also get the pod's IP and log it.

    #kubectl run --generator=run/v1 --image=busybox busy-replicationcontroller --dry-run -o yaml > /tmp/replicationcontroller.yaml
    #cat /tmp/replicationcontroller.yaml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      namespace: casesup
      creationTimestamp: null
      labels:
        run: busy-replicationcontroller
      name: busy-replicationcontroller
    spec:
      replicas: 1
      selector:
        run: busy-replicationcontroller
      template:
        metadata:
          creationTimestamp: null
          labels:
            run: busy-replicationcontroller
        spec:
          containers:
          - image: busybox
            command: ["/bin/sh"]
            args: ["-c", "while true; do echo $(ip a|grep inet|grep global|awk '{print $2}');sleep 2; done"]
            name: busy-replicationcontroller
            resources: {}
    status:
      replicas: 0
    #kubectl create -f /tmp/replicationcontroller.yaml
    #kubectl get replicationcontroller -n casesup
    NAME                         DESIRED   CURRENT   READY   AGE
    busy-replicationcontroller   1         1         1       4m22s
    #kubectl get pods -n casesup
    NAME                               READY   STATUS    RESTARTS   AGE
    busy-replicationcontroller-x2pb6   1/1     Running   0          4m33s
    #kubectl logs busy-replicationcontroller-x2pb6 -n casesup
    172.18.0.7/24
    172.18.0.7/24
    172.18.0.7/24
    172.18.0.7/24
    172.18.0.7/24
    172.18.0.7/24
    172.18.0.7/24
    172.18.0.7/24
    172.18.0.7/24

Use the "edit" option to modify the ReplicationController. Because it lives in the casesup namespace, pass the namespace here as well:

    #kubectl edit replicationcontroller busy-replicationcontroller -n casesup
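Parsing the output of ip a is one way to get the address. As an alternative sketch, the Downward API can expose the pod IP to the container as an environment variable through a fieldRef on status.podIP. The object name busy-podip and the variable name POD_IP are made up for this example.

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  namespace: casesup
  name: busy-podip                 # hypothetical name
spec:
  replicas: 1
  selector:
    run: busy-podip
  template:
    metadata:
      labels:
        run: busy-podip
    spec:
      containers:
      - name: busy-podip
        image: busybox
        env:
        - name: POD_IP             # hypothetical variable name
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        command: ["/bin/sh"]
        # $POD_IP is expanded by the shell inside the container
        args: ["-c", "while true; do echo $POD_IP; sleep 2; done"]
```

With this variant the log lines carry the bare address, without the /24 prefix length that ip a reports.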

Question 3: Use the casesup namespace and create a ReplicaSet. You can use the busybox image to create the pods. The ReplicaSet selector will be "app=busybox environment=test".

    #kubectl run --generator=run/v1 --image=busybox busy-replicationcontroller --dry-run -o yaml > /tmp/replicasets.yaml
    kubectl run --generator=run/v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

Change some parameters:

    #vi /tmp/replicasets.yaml
    #cat /tmp/replicasets.yaml
    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      namespace: casesup
      labels:
        app: busybox
      name: busy-replicasets
    spec:
      replicas: 2
      selector:
        matchExpressions:
        - {key: app, operator: In, values: [busybox]}
        - {key: environment, operator: In, values: [test]}
      template:
        metadata:
          creationTimestamp: null
          labels:
            app: busybox
            environment: test
        spec:
          containers:
          - image: busybox
            command: ["/bin/sh"]
            args: ["-c", "while true; do echo $(ip a|grep inet|grep global|awk '{print $2}');sleep 2; done"]
            name: busy-replicasets
            resources: {}
    status:
      replicas: 0
    #kubectl create -f /tmp/replicasets.yaml
    replicaset.apps/busy-replicasets created
    #kubectl get replicasets -n casesup
    NAME               DESIRED   CURRENT   READY   AGE
    busy-replicasets   2         2         2       6s
    #kubectl get pods -n casesup
    NAME                     READY   STATUS    RESTARTS   AGE
    busy-replicasets-q8rs4   1/1     Running   0          11s
    busy-replicasets-qjvk6   1/1     Running   0          11s
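Since both requirements in this answer are simple equality checks, the selector could arguably be written with matchLabels instead of matchExpressions. The following is a sketch of that alternative form for the same busy-replicasets object; it is meant as a replacement for the definition above, not something to create alongside it.

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  namespace: casesup
  labels:
    app: busybox
  name: busy-replicasets
spec:
  replicas: 2
  selector:
    matchLabels:               # equivalent to "app In [busybox]" and "environment In [test]"
      app: busybox
      environment: test
  template:
    metadata:
      labels:
        app: busybox
        environment: test
    spec:
      containers:
      - name: busy-replicasets
        image: busybox
        command: ["/bin/sh"]
        args: ["-c", "while true; do echo $(ip a|grep inet|grep global|awk '{print $2}');sleep 2; done"]
```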

Question 4: Use the busybox-deployment that you created before. Change the container port and add a "change-cause" to the Deployment config. Use the "kubectl get deployment" command to figure out how the pods terminate. Perform a rollback operation for the busybox-deployment.

You can add "kubernetes.io/change-cause: port changed from 8080 to 80" to the metadata annotations of the Deployment definition. Also change the container port from 80 to 8080.

    #kubectl edit deployment busybox-deployment -n casesup
    deployment.extensions/busybox-deployment edited
    #kubectl get pods -n casesup
    NAME                                  READY   STATUS              RESTARTS   AGE
    busybox-deployment-6c8769f9df-kpwfk   1/1     Running             0          6m43s
    busybox-deployment-6c8769f9df-ltgl2   1/1     Running             0          6m49s
    busybox-deployment-6c8769f9df-nmw6x   1/1     Running             0          6m46s
    busybox-deployment-7f656ff6bf-b6c4w   0/1     ContainerCreating   0          2s
    #kubectl get pods -n casesup
    NAME                                  READY   STATUS              RESTARTS   AGE
    busybox-deployment-6c8769f9df-kpwfk   1/1     Running             0          6m44s
    busybox-deployment-6c8769f9df-ltgl2   1/1     Running             0          6m50s
    busybox-deployment-6c8769f9df-nmw6x   1/1     Running             0          6m47s
    busybox-deployment-7f656ff6bf-b6c4w   0/1     ContainerCreating   0          3s
    #kubectl get pods -n casesup
    NAME                                  READY   STATUS              RESTARTS   AGE
    busybox-deployment-6c8769f9df-kpwfk   1/1     Terminating         0          6m45s
    busybox-deployment-6c8769f9df-ltgl2   1/1     Running             0          6m51s
    busybox-deployment-6c8769f9df-nmw6x   1/1     Running             0          6m48s
    busybox-deployment-7f656ff6bf-46mwg   0/1     ContainerCreating   0          1s
    busybox-deployment-7f656ff6bf-b6c4w   1/1     Running             0          4s
    #kubectl get pods -n casesup
    NAME                                  READY   STATUS        RESTARTS   AGE
    busybox-deployment-6c8769f9df-kpwfk   1/1     Terminating   0          6m54s
    busybox-deployment-6c8769f9df-ltgl2   1/1     Terminating   0          7m
    busybox-deployment-6c8769f9df-nmw6x   1/1     Terminating   0          6m57s
    busybox-deployment-7f656ff6bf-46mwg   1/1     Running       0          10s
    busybox-deployment-7f656ff6bf-b6c4w   1/1     Running       0          13s
    busybox-deployment-7f656ff6bf-dwk7v   1/1     Running       0          7s
    #kubectl rollout history deployment/busybox-deployment -n casesup
    deployment.extensions/busybox-deployment
    REVISION  CHANGE-CAUSE
    2         port changed from 8080 to 80

Perform the rollback operation:

    #kubectl rollout undo deployment busybox-deployment -n casesup
    deployment.extensions/busybox-deployment rolled back
    #kubectl get pods -n casesup
    NAME                                  READY   STATUS        RESTARTS   AGE
    busybox-deployment-6c8769f9df-2nbz7   1/1     Running       0          8s
    busybox-deployment-7f656ff6bf-46mwg   1/1     Running       0          2m36s
    busybox-deployment-7f656ff6bf-b6c4w   1/1     Running       0          2m39s
    busybox-deployment-7f656ff6bf-dwk7v   1/1     Terminating   0          2m33s
    #kubectl get pods -n casesup
    NAME                                  READY   STATUS              RESTARTS   AGE
    busybox-deployment-6c8769f9df-2nbz7   1/1     Running             0          12s
    busybox-deployment-6c8769f9df-vqtxr   0/1     ContainerCreating   0          4s
    busybox-deployment-7f656ff6bf-46mwg   1/1     Running             0          2m40s
    busybox-deployment-7f656ff6bf-b6c4w   1/1     Running             0          2m43s
    busybox-deployment-7f656ff6bf-dwk7v   1/1     Terminating         0          2m37s
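For reference, the change-cause shown in the rollout history is an annotation on the Deployment's own metadata. The fragment below sketches only that part of the object, with the annotation text copied from the answer above; the rest of the busybox-deployment definition is not shown in this post and is left out here rather than guessed.

```yaml
metadata:
  name: busybox-deployment
  namespace: casesup
  annotations:
    # this text appears in the CHANGE-CAUSE column of "kubectl rollout history"
    kubernetes.io/change-cause: "port changed from 8080 to 80"
```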