Kubernetes has become the de facto standard for container orchestration, automating application deployment, scaling, and management, since Google released the project in 2014. However, the power of Kubernetes also brings a great deal of complexity. Kubernetes is a living platform that evolves rapidly, with new features being added and bugs being fixed regularly.

Many organizations are new to Kubernetes and its ecosystem. Migrating applications and compute to a cloud-based platform can be a new challenge even for the most technical professionals. However, this is a technology transformation that microservice architecture forces.

The inherent complexity of Kubernetes often leads to outdated or misconfigured platforms, which makes the cluster less secure and more susceptible to attack. Although some security mechanisms are included by default at the design level, Kubernetes does not enforce security settings in many installation steps. Protecting a K8s cluster is a significant undertaking that requires both a deep understanding of the underlying technology and the engineering expertise to manage it.

Kubernetes Security:

- Cluster Security

- Select Container Engine and Runtime

- Security of Container Hosts

- Use Native Container Security Features

- Logging, Auditing, and Monitoring

Cluster Security

Standardize on a Platform

Standardizing on one K8s platform not only simplifies K8s operations but also reduces the security risks related to vulnerabilities in the K8s software. Organizations commonly adopt enterprise distributions, such as Red Hat OpenShift, VMware Enterprise PKS, and Rancher. However, a few companies have the flexibility to deploy and manage vanilla K8s with their own IT resources.

K8s Platforms:

- Self-managed Kubernetes: The organization has the flexibility to deploy and manage vanilla K8s with its own IT resources. (CNCF Upstream, Rancher Kubernetes Engine, Enterprise Pivotal Container Service, OpenShift Container Platform)

- Provider-managed Kubernetes: The organization consumes Kubernetes as a service that takes over the work of running the control plane components, so that it can focus on other operations and on deploying workloads. (Amazon Elastic Kubernetes Service, Red Hat OpenShift on IBM Cloud)

- Serverless Kubernetes: The organization consumes Kubernetes as a service that takes over the work of running both the control plane and the runtime components. (Amazon EKS on AWS Fargate, Google Cloud Run on GKE)

When choosing between these modalities, we need to assess the company’s requirements, budget, capabilities, and flexibility. If the company opts for self-managed Kubernetes, does it have the time and skills required to deploy, manage, and maintain the K8s cluster? Security concerns are also well worth considering when choosing between these modalities.

Select Container Engine and Runtime

Secure container runtimes reduce the risk associated with container runtime attacks. In many cases, the choice of container engine and runtime will depend on the K8s distribution. Common options include CNCF containerd, Docker (which is built on containerd), and Podman. The ability to customize container engines and runtimes can make each deployed K8s cluster unique. There are two main attack types against the container runtime: container breakouts and privilege escalations. For example, the runc vulnerability CVE-2019-5736 allowed an attacker to execute a command as root, overwrite the container runtime binary, and obtain root access to the host and then to the cluster. What could be worse than this?

Several approaches restrict this kind of malicious activity:

- gVisor runtime: An open-source sandbox from Google that intercepts system calls in a user-space kernel and limits which syscalls reach the host.

- Kata runtime: Runs containers inside lightweight virtual machines to provide additional isolation from the host and from the rest of the cluster.

- KubeVirt: Runs virtual machine workloads in a K8s cluster alongside containers.
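In Kubernetes, a sandboxed runtime is usually selected per pod through a RuntimeClass. The sketch below builds the two manifests as plain Python dictionaries and prints them as JSON. It assumes the nodes already have gVisor installed and registered under the handler name `runsc`; the names `gvisor` and `untrusted-app` and the image are illustrative placeholders, not values from any particular cluster.

```python
import json


def runtime_class(name: str, handler: str) -> dict:
    """Build a RuntimeClass that maps a cluster-wide name to a node runtime handler."""
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        "handler": handler,
    }


def sandboxed_pod(name: str, image: str, runtime_class_name: str) -> dict:
    """Build a Pod that asks to be run by the given RuntimeClass."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "runtimeClassName": runtime_class_name,
            "containers": [{"name": name, "image": image}],
        },
    }


# Assumption: "runsc" is the handler configured for gVisor on the nodes.
manifests = [
    runtime_class("gvisor", "runsc"),
    sandboxed_pod("untrusted-app", "nginx:1.25", "gvisor"),
]

for manifest in manifests:
    print(json.dumps(manifest, indent=2))
```

A pod without `runtimeClassName` keeps using the cluster's default runtime, so only the workloads that need the extra isolation pay its overhead.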

Security of Container Hosts

Container hosts must also be kept in a secure, well-defined configuration to reduce operating-system-based attacks. Master and worker nodes must be secured whether they are installed on a virtualization platform or on bare metal. If you introduce a known vulnerable service on a container host or within a container workload, you create another attack vector that can compromise the Kubernetes cluster. Traditional operating system security best practices therefore apply: reduce the services and open ports that attackers can use.

To reduce potential risk, consider lightweight operating systems or a container/K8s-optimized OS:

- Alpine Linux

- Fedora CoreOS

- Mesosphere DC/OS

- Rancher K3s (for IoT and edge use cases)

- Rancher RancherOS

- VMware Photon OS

Use Native Container Security Features

K8s has many native security mechanisms to prevent workload compromise, such as namespaces, unprivileged users, container limits, minimal official base images, discretionary access control, SELinux, AppArmor, node affinity, and anti-affinity.

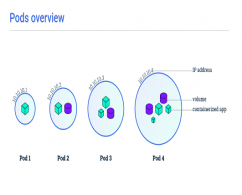

Namespaces are key components of Kubernetes security, and many K8s role-based access control (RBAC) rules map to them. Namespaces are used to define project scope within a cluster, create fine-grained access controls, and implement many of the native K8s network security and workload/pod security controls. It is not possible to talk about security in Kubernetes without considering namespaces.
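To make the namespace-to-RBAC mapping concrete, here is a minimal sketch that builds a Namespace, a Role that can only read pods in that namespace, and a RoleBinding that grants the Role to one user. The function name, the role name `pod-reader`, the namespace `payments`, and the user `jane` are illustrative assumptions.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def namespaced_read_only_access(namespace: str, user: str) -> list:
    """Build manifests that let `user` read pods in `namespace` and nowhere else."""
    role_name = "pod-reader"
    return [
        {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": namespace}},
        {
            "apiVersion": f"{RBAC_GROUP}/v1",
            "kind": "Role",
            "metadata": {"name": role_name, "namespace": namespace},
            # The empty string selects the core API group, where pods live.
            "rules": [
                {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}
            ],
        },
        {
            "apiVersion": f"{RBAC_GROUP}/v1",
            "kind": "RoleBinding",
            "metadata": {"name": f"{role_name}-{user}", "namespace": namespace},
            "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
            "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_GROUP},
        },
    ]


if __name__ == "__main__":
    for manifest in namespaced_read_only_access("payments", "jane"):
        print(json.dumps(manifest, indent=2))
```

Because both the Role and the RoleBinding carry the namespace in their metadata, the permission does not extend to pods in any other namespace.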

Namespaces do not restrict access to physical resources such as CPU, memory, and disk. These resources are restricted by a Linux kernel feature called cgroups (control groups). The Red Hat documentation covers cgroups and namespaces in more detail.
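Kubernetes surfaces these cgroup-backed limits through the `resources` field of a container and, per namespace, through a ResourceQuota. The sketch below shows one possible shape of both; the helper names and the numbers are assumptions chosen for illustration.

```python
import json


def limited_container(name: str, image: str, cpu: str, memory: str) -> dict:
    """Build a container spec whose CPU and memory are capped."""
    return {
        "name": name,
        "image": image,
        "resources": {
            # Requests drive scheduling; limits are what the node enforces.
            "requests": {"cpu": cpu, "memory": memory},
            "limits": {"cpu": cpu, "memory": memory},
        },
    }


def namespace_quota(namespace: str, cpu: str, memory: str, pods: int) -> dict:
    """Build a ResourceQuota that caps the total resources of one namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "compute-quota", "namespace": namespace},
        "spec": {"hard": {"limits.cpu": cpu, "limits.memory": memory, "pods": str(pods)}},
    }


if __name__ == "__main__":
    print(json.dumps(limited_container("api", "nginx:1.25", "500m", "256Mi"), indent=2))
    print(json.dumps(namespace_quota("payments", "4", "8Gi", 20), indent=2))
```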

Other Container Security Fundamentals:

- Run containers as a user (unprivileged)

- Limit the ability for containers to escalate privileges

- Use minimal and hardened base images with no extra tools

- Leverage container benchmarks
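The first two fundamentals map onto a container's `securityContext`. As a minimal sketch, the hypothetical `security_findings` helper below inspects a container spec and lists the settings that are missing. It only looks at the container-level `securityContext`, so settings inherited from the pod level would be reported as missing; a real policy engine or admission controller would cover more cases.

```python
def security_findings(container: dict) -> list:
    """Return human-readable findings for a container spec's securityContext."""
    ctx = container.get("securityContext", {})
    findings = []
    if ctx.get("runAsNonRoot") is not True:
        findings.append("runAsNonRoot is not set to true")
    if ctx.get("allowPrivilegeEscalation") is not False:
        findings.append("allowPrivilegeEscalation is not set to false")
    if ctx.get("privileged") is True:
        findings.append("container runs in privileged mode")
    if "ALL" not in ctx.get("capabilities", {}).get("drop", []):
        findings.append("capabilities do not drop ALL")
    return findings


hardened = {
    "name": "api",
    "image": "nginx:1.25",
    "securityContext": {
        "runAsNonRoot": True,
        "allowPrivilegeEscalation": False,
        "capabilities": {"drop": ["ALL"]},
    },
}
default = {"name": "api", "image": "nginx:1.25"}

if __name__ == "__main__":
    print("hardened:", security_findings(hardened))
    print("default:", security_findings(default))
```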

Node affinity can also be useful for controlling which application pods run on which hosts in the cluster. This native feature allows us to pin certain application pods to specific hosts and keep them isolated from other workloads.
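As an illustration, the sketch below adds a required node affinity rule to a pod manifest so that the scheduler only places it on nodes carrying a given label. The helper name, the label key `workload-tier`, and the value `restricted` are assumptions; the nodes would need to be labeled accordingly beforehand.

```python
import copy
import json


def pin_to_labeled_nodes(pod: dict, key: str, values: list) -> dict:
    """Return a copy of `pod` that may only be scheduled on nodes where label `key` is in `values`."""
    pinned = copy.deepcopy(pod)
    pinned["spec"]["affinity"] = {
        "nodeAffinity": {
            # "required..." is a hard rule: no matching node means the pod stays Pending.
            "requiredDuringSchedulingIgnoredDuringExecution": {
                "nodeSelectorTerms": [
                    {"matchExpressions": [{"key": key, "operator": "In", "values": values}]}
                ]
            }
        }
    }
    return pinned


base_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "billing"},
    "spec": {"containers": [{"name": "billing", "image": "nginx:1.25"}]},
}

if __name__ == "__main__":
    print(json.dumps(pin_to_labeled_nodes(base_pod, "workload-tier", ["restricted"]), indent=2))
```

Node affinity only attracts the pod to the labeled hosts. Keeping other pods off those hosts requires a complementary mechanism such as taints and tolerations.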


Logging, Auditing, and Monitoring

Designing logging, auditing, and monitoring procedures is one of the most critical tasks for platform security and operational excellence.

Regular log collection and monitoring are critical to understanding security posture and service availability, especially during an active investigation or a security breach. Logs are useful for establishing a baseline, identifying potential risks, and detecting operational anomalies. In some cases, an effective logging, auditing, and monitoring platform can be the difference between a low-impact and a high-impact security incident.
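The idea of a baseline can be sketched with a few lines of arithmetic: summarize a historical metric (here, failed requests per hour taken from logs) and flag new observations that sit far above it. The data, the function names, and the three-standard-deviation threshold are illustrative assumptions; production monitoring systems typically use more robust methods.

```python
import statistics


def build_baseline(history: list) -> tuple:
    """Return (mean, population standard deviation) of historical counts."""
    return statistics.mean(history), statistics.pstdev(history)


def is_anomalous(value: float, baseline: tuple, sigmas: float = 3.0) -> bool:
    """Flag values more than `sigmas` standard deviations above the baseline mean."""
    mean, stdev = baseline
    return value > mean + sigmas * stdev


# Hypothetical hourly counts of failed API requests during normal operation.
history = [4, 5, 6, 5, 4, 6]
baseline = build_baseline(history)

if __name__ == "__main__":
    for observed in (6, 20):
        print(observed, "anomalous" if is_anomalous(observed, baseline) else "normal")
```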

“However, the native functionality provided by a container engine or runtime is usually not enough for a complete logging solution. For example, if a container crashes, a pod is evicted, or a node dies, you'll usually still want to access your application's logs. As such, logs should have separate storage and lifecycle independent of nodes, pods, or containers. This concept is called cluster-level-logging. Cluster-level logging requires a separate backend to store, analyze, and query logs. Kubernetes provides no native storage solution for log data, but you can integrate many existing logging solutions into your Kubernetes cluster.”

The detailed logging methodologies are explained in Kubernetes' documentation.

Kubernetes’ Logging Methodologies:

- Node-level logging agent that runs on every node

- Sidecar containers in pods

- Direct integration to a logging back end
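The sidecar approach can be sketched as a pod with two containers that share an `emptyDir` volume: the application writes its log file there, and a small sidecar streams that file to its own stdout, where a cluster-level collector can pick it up. The function name, the log path, the file name `app.log`, and the images are assumptions for illustration.

```python
import json


def pod_with_log_sidecar(name: str, app_image: str, log_dir: str = "/var/log/app") -> dict:
    """Build a Pod whose sidecar streams the application's log file to stdout."""
    mount = {"name": "app-logs", "mountPath": log_dir}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                # Assumption: the application writes to <log_dir>/app.log.
                {"name": "app", "image": app_image, "volumeMounts": [mount]},
                {
                    "name": "log-streamer",
                    "image": "busybox:1.36",
                    "command": ["/bin/sh", "-c", f"tail -n+1 -F {log_dir}/app.log"],
                    # The sidecar only needs to read the logs.
                    "volumeMounts": [dict(mount, readOnly=True)],
                },
            ],
            "volumes": [{"name": "app-logs", "emptyDir": {}}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(pod_with_log_sidecar("web", "example/app:1.0"), indent=2))
```

Note that an `emptyDir` volume lives only as long as the pod, so the logs still need to be shipped to a separate backend to outlive it.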

https://www.marcolancini.it/2020/blog-kubernetes-threat-modelling/

https://logz.io/blog/a-practical-guide-to-kubernetes-logging/

https://kubernetes.io/docs/concepts/cluster-administration/logging/